Big Data is a term that describes huge amounts of data that are collected, stored, and analyzed in order to obtain useful information and discover new knowledge.

What is Big Data – concept and definition in simple words.

In simple terms, Big Data is a large amount of information that is collected from various sources and processed using specialized tools.

Imagine a huge library with thousands of books, where each page contains some data. Big Data is like the entire library, which we analyze to find important knowledge and answers to our questions.

The main features of Big Data are often summarized as the three Vs: Volume, Variety, and Velocity. Volume is the amount of information collected, variety is the range of different data types, and velocity is the speed at which data is generated and must be collected and processed.

Big Data is used in many industries, such as medicine, finance, and marketing, to better understand the current situation, identify relationships in the data, and support decision-making. Used well, it opens up new opportunities and can help improve the quality of our lives.

Pioneers in the use of Big Data.

Big Data was first applied in several well-known projects that demonstrated the potential of this technology and changed the course of development in various industries.

The SETI@home project: The Search for Intelligent Life in the Universe.

One of the earliest examples of Big Data in practice is the SETI@home project, launched in 1999. Its goal was to analyze huge volumes of radio signals from space to detect possible traces of intelligent life. The data was split into small chunks and distributed among hundreds of thousands of privately owned computers, an early example of the distributed processing approach that later became central to Big Data systems.

Human genome research: A revolution in genetics.

In the early 2000s, one of the best-known scientific projects, the Human Genome Project, completed the sequencing of the human genome. It required the analysis and storage of a huge amount of genetic information. Large-scale data processing made it possible to build databases of genetic sequences, which became the basis for numerous scientific studies and for the development of personalized medicine.

Google: Innovations in search technology.

Google, the world's leading search engine, has relied on Big Data since its founding in 1998. By analyzing huge amounts of information from the Internet, Google improved its search algorithms and built services such as Google Maps, Google Analytics, and Google Ads, which revolutionized digital marketing, advertising, and analytics.

Netflix: Personalization of recommendations in streaming services.

The video streaming service Netflix became another pioneer in the use of Big Data. The company began analyzing large amounts of data about its users and their viewing habits back in the 2000s. Machine learning algorithms allowed Netflix to create personalized recommendations for each user, increasing customer satisfaction and strengthening audience loyalty.

These and other early use cases inspired many companies and organizations to explore the possibilities of this technology. Over time, Big Data has become a key analytical tool in many industries, from healthcare to finance, and it continues to open new horizons in the development of the modern world.

The origin and popularity of the term Big Data.

To understand how the name “Big Data” came to be and how it has gained popularity in the tech world, let’s dive into the history of this concept.

- The origin of the term Big Data. The term “Big Data” appeared in the late 1990s, although some sources trace it back to the 1970s. It is most often credited to John Mashey, then chief scientist at Silicon Graphics, who described “big data” as one of the main challenges of modern computing.

- The term gains popularity. In the early 2000s, with the growth of the Internet and the first implementations of Big Data technologies, the term became increasingly well known in the technical community. In 2011, the research and advisory firm Gartner named Big Data one of its key strategic technologies, which further fueled interest in the topic.

- Big Data is gaining momentum. Since the early 2010s, when many companies began to implement Big Data in their processes, a revolution in the use of these technologies has taken place. Scientists, analysts, and business professionals have realized the importance of Big Data for innovation, competitiveness, and the creation of new opportunities.

Thus, the term “Big Data” has gradually become a fixture of the modern technological world, closely associated with data-driven analysis and strategic development.

Key features of Big Data.

To better understand the essence of Big Data, let’s look at five main characteristics that distinguish big data from traditional information processing:

- Volume. One of the most obvious features of Big Data is the sheer amount of data, which keeps growing. Individual organizations work with terabytes, petabytes, and even exabytes, while the total amount of data generated worldwide by the Internet, social media, sensors, mobile devices, and other sources is measured in zettabytes.

- Variety. Big Data includes different types of data: structured, semi-structured, and unstructured. It can be numbers, text, images, audio and video files, geolocation data, etc. Processing such a wide variety of data requires special methods and tools.

- Velocity. The speed at which data is collected, processed, and analyzed also plays an important role in Big Data. High processing rates allow companies to respond to changes in real time and make appropriate decisions faster.

- Veracity. An important characteristic of Big Data is data reliability, which reflects the accuracy, consistency, and relevance of information. Incorrect, inconsistent, or outdated information can lead to erroneous conclusions and poor strategic decisions.

- Value. The ultimate goal of big data analysis is to extract valuable information that helps companies optimize their processes, gain competitive advantages, and accelerate innovation. The value of Big Data lies in revealing connections, trends, and patterns that were previously hidden.
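The storage units mentioned above grow by a factor of 1,000 at each step in the decimal (SI) convention; some tools use binary units of 1,024 instead. As a small illustration of the scale, here is a minimal Python sketch that expresses a raw byte count in these units. The function name `human_readable` is hypothetical, and the decimal convention is an assumption.

```python
# Decimal (SI) storage units: each step is 1,000 times the previous one.
# Assumption: decimal units; binary tools would divide by 1,024 instead.
UNITS = ["B", "KB", "MB", "GB", "TB", "PB", "EB", "ZB"]


def human_readable(num_bytes: float) -> str:
    """Express a byte count in the largest unit that keeps the value below 1,000."""
    value = float(num_bytes)
    for unit in UNITS[:-1]:
        if value < 1000:
            return f"{value:.1f} {unit}"
        value /= 1000
    # Anything this large is reported in the biggest unit we know about.
    return f"{value:.1f} {UNITS[-1]}"


if __name__ == "__main__":
    print(human_readable(2_500_000_000_000))  # 2.5 TB
    print(human_readable(10**21))             # 1.0 ZB
```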

The impact of Big Data characteristics on different industries.

Given the above characteristics, it is easy to see why Big Data has become a key technology in many industries. The volume, variety, velocity, veracity, and value of data shape the approach to information analysis, which opens up new opportunities for businesses.

Examples of Big Data’s impact on various industries:

- In marketing and advertising: Big Data helps companies better understand the needs of their customers, develop more effective communication strategies, and track the results of advertising campaigns.

- In the financial sector: Big data is used to detect fraud, assess credit risk, and improve algorithmic trading.

- In healthcare: Big data analysis can help detect epidemics and pandemics, improve patient experience, and accelerate the development of new drugs and therapies.

- In science: Big Data plays an important role in large-scale scientific research in fields such as astronomy, genomics, and climatology.
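To make the fraud-detection use case more concrete: real fraud systems combine many signals and trained models, but one basic building block is flagging transactions whose amount deviates strongly from a customer's typical spending. The minimal sketch below uses a robust, median-based z-score (the "modified z-score" with the commonly used 3.5 cut-off); the function name `flag_outliers` and the sample amounts are hypothetical.

```python
from statistics import median


def flag_outliers(amounts: list[float], threshold: float = 3.5) -> list[int]:
    """Return indices of amounts whose modified z-score exceeds the threshold.

    The median and the median absolute deviation (MAD) are used instead of the
    mean and standard deviation, so a single huge transaction cannot hide itself
    by inflating the statistics.
    """
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        return []  # no spread at all: this score cannot rank deviations
    # 0.6745 rescales the MAD so scores are comparable to ordinary z-scores
    # for normally distributed data.
    return [i for i, a in enumerate(amounts)
            if abs(0.6745 * (a - med) / mad) > threshold]


if __name__ == "__main__":
    history = [25.0, 30.0, 27.5, 22.0, 31.0, 29.0, 2400.0]
    print(flag_outliers(history))  # [6]: only the 2400.0 payment is flagged
```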

Given this broad impact, Big Data has become an important tool for companies, organizations, and scientific institutions in the modern world. By analyzing big data, stakeholders can make decisions more efficiently, discover new opportunities, and track their progress in real time.

To ensure success in using Big Data, it is necessary to have not only specialists who understand these characteristics, but also the necessary technical resources, such as analytical software, powerful computers, and appropriate algorithms to process large amounts of diverse data.

Conclusion: The key features of Big Data (volume, variety, velocity, veracity, and value) have a significant impact on many industries in the modern world. Implementing Big Data can provide a number of benefits, including increased efficiency, new opportunities, and competitive advantage. To achieve optimal results, organizations need to understand these features and have the proper technical resources and qualified specialists.

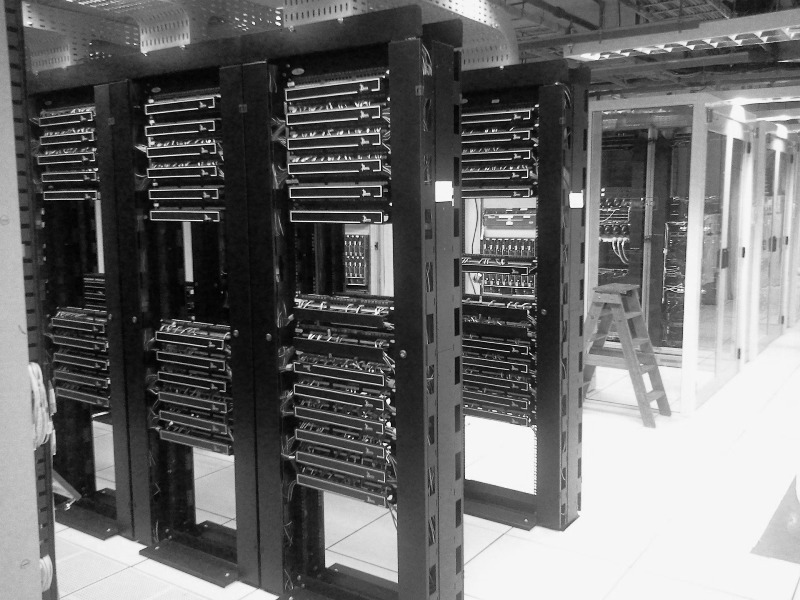

The internal mechanism of Big Data: Collecting, processing and analyzing big data.

Central to Big Data are the processes of data collection, processing, and analysis. Data is collected from various sources, such as social media, IoT devices, sensors, and many others. Once collected, the data needs to be processed: filtered, cleansed, and structured for further analysis.
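The collect, filter, cleanse, and structure steps described above can be sketched as a chain of Python generators, so records stream through one at a time instead of being loaded all at once. This is a minimal single-machine illustration: the sample events, the field names, and the `-100` sentinel rule for faulty sensor readings are all hypothetical assumptions.

```python
import json

# Hypothetical raw events from two kinds of sources (an app and an IoT sensor).
RAW_EVENTS = [
    '{"source": "app", "user": " Alice ", "temp": null}',
    '{"source": "sensor", "device": "s-17", "temp": "21.5"}',
    'not valid json',
    '{"source": "sensor", "device": "s-17", "temp": "-999"}',
]


def parse(lines):
    """Filter: keep only lines that are valid JSON."""
    for line in lines:
        try:
            yield json.loads(line)
        except json.JSONDecodeError:
            continue


def cleanse(records):
    """Cleanse: trim strings, convert types, drop impossible readings."""
    for r in records:
        if isinstance(r.get("user"), str):
            r["user"] = r["user"].strip()
        if r.get("temp") is not None:
            r["temp"] = float(r["temp"])
            if r["temp"] < -100:  # assumed sentinel for a faulty sensor
                continue
        yield r


def structure(records):
    """Structure: map every record onto one fixed schema."""
    for r in records:
        yield {
            "source": r.get("source"),
            "entity": r.get("user") or r.get("device"),
            "temp_c": r.get("temp"),
        }


if __name__ == "__main__":
    for row in structure(cleanse(parse(RAW_EVENTS))):
        print(row)
```

Because each stage is a generator, the same structure scales to inputs far larger than memory; distributed frameworks apply the same idea across many machines.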

The role of data storage, processing systems, and analytical tools.

To store and process big data, specialized storage and processing systems are used, such as Hadoop, Spark, NoSQL databases, and others. These technologies make it possible to store and process large volumes of diverse data quickly and efficiently.

As for analytical tools, there are many software solutions that help companies study trends, identify patterns, and draw valuable insights from big data. They include tools for text processing, data visualization, and machine learning.

The importance of data privacy and security.

Given the volume and sensitivity of information processed in Big Data, much attention is paid to data privacy and security. Ensuring the protection of user data and compliance with personal data processing laws is critical for any organization working with big data. This includes the use of various methods of encryption, authentication, authorization, and auditing.
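Alongside the encryption, authentication, authorization, and auditing mentioned above, a related technique (not named in the text) often applied to analytics data is pseudonymization: replacing direct identifiers with tokens before the data reaches analysts. A minimal sketch using Python's standard `hmac` module; the function name and the hard-coded key are illustrative assumptions, and in practice the key would come from a secrets store rather than source code.

```python
import hashlib
import hmac


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC).

    The same ID always maps to the same token, so datasets can still be joined
    on it, but without the secret key nobody can recompute the token for a
    guessed ID.
    """
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    key = b"example-key-load-from-a-secrets-store"  # hypothetical key
    print(pseudonymize("user-42", key))
```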

Data integration and data quality assurance.

Another important aspect of working with big data is data integration and data quality assurance. Data integration means combining different data sources into a single system that can be easily processed and analyzed. Data quality assurance includes filtering out inaccuracies, duplicates, and missing values, which helps improve the accuracy of big data analysis and decision-making.
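Integration and quality assurance can be illustrated on two small, hypothetical sources: a CRM export and web-analytics counts keyed by customer ID. The minimal sketch below removes exact duplicates, joins the sources into one record per customer, and reports missing values per field. All data and function names are hypothetical; real pipelines would use dedicated tools, but the steps are the same.

```python
# Two hypothetical sources describing the same customers.
crm = [
    {"id": 1, "name": "Alice", "email": "alice@example.com"},
    {"id": 2, "name": "Bob", "email": None},
    {"id": 2, "name": "Bob", "email": None},  # exact duplicate
]
web = [
    {"customer_id": 1, "visits": 12},
    {"customer_id": 2, "visits": 3},
    {"customer_id": 3, "visits": 7},  # no matching CRM record
]


def deduplicate(rows):
    """Drop exact duplicate rows, keeping the first occurrence."""
    seen, out = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            out.append(row)
    return out


def integrate(crm_rows, web_rows):
    """Left join on customer ID: every CRM customer gets its visit count.

    Web-only customers (id 3 here) are not included in the result.
    """
    visits = {r["customer_id"]: r["visits"] for r in web_rows}
    return [{**row, "visits": visits.get(row["id"])} for row in deduplicate(crm_rows)]


def quality_report(rows):
    """Count missing (None) values per field so gaps are visible before analysis."""
    fields = {f for row in rows for f in row}
    return {f: sum(1 for row in rows if row.get(f) is None) for f in sorted(fields)}


if __name__ == "__main__":
    merged = integrate(crm, web)
    print(merged)
    print(quality_report(merged))
```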

The future of Big Data and its impact on society.

Big Data continues to evolve, and as it does, so does its impact on various aspects of society. From healthcare to finance, from education to government organizations, the use of big data is opening up new opportunities to discover knowledge and improve people’s lives.

It is expected that in the future, the use of big data will become even more widespread and integrated into people’s daily lives. This may lead to the creation of new products and services based on individual needs and preferences of users, as well as to the strengthening of security and transparency of data use.

Basic Big Data technologies and their ecosystem: Hadoop, Spark, and NoSQL databases.

Big Data uses a variety of technologies to efficiently store, process, and analyze large amounts of information. Some of the most popular technologies used in this field include Hadoop, Spark, and NoSQL databases. Let’s take a look at the role and functionality of these technologies in the process of big data processing.

Hadoop: distributed big data processing.

Hadoop is an open-source framework for storing and processing large data sets on clusters of inexpensive commodity servers. Its storage layer, the Hadoop Distributed File System (HDFS), splits data into blocks and distributes them across cluster nodes. The main components of Hadoop are HDFS and MapReduce, a programming model for processing data in parallel on different cluster nodes; modern versions also include YARN for managing cluster resources.
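MapReduce splits a job into a map phase, a shuffle that groups intermediate results by key, and a reduce phase. The classic word-count example can be simulated in a single Python process to show how data flows between these phases. This is only a sketch of the model: in real Hadoop each input split would be processed on a different node, usually close to where HDFS stores it, and the job would be written against Hadoop's own APIs (for example in Java, or as scripts via Hadoop Streaming).

```python
from collections import defaultdict
from itertools import chain


def map_phase(split: str):
    """Map: emit a (word, 1) pair for every word in one input split."""
    for word in split.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all emitted values by key, as the framework does between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine all values collected for one key."""
    return key, sum(values)


def word_count(splits):
    mapped = chain.from_iterable(map_phase(s) for s in splits)
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


if __name__ == "__main__":
    print(word_count(["big data big", "data tools"]))
```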

Spark: high-speed data processing and analysis.

Spark is another open-source Big Data framework, designed for fast, largely in-memory data processing and analysis, including near-real-time stream processing. It can run with its own standalone cluster manager or on Hadoop YARN, Kubernetes, or Apache Mesos (deprecated in recent releases). Spark provides APIs for Scala, Java, Python, and R, and includes libraries for SQL queries, machine learning, graph analysis, and streaming data processing.
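A key idea in Spark's programming model is the split between lazy transformations (such as `map` and `filter`), which only describe work, and actions (such as `collect` and `count`), which trigger it. The toy class below is a hypothetical, single-process imitation of that idea in plain Python, not Spark code; real Spark additionally partitions the data across executors and can keep intermediate results in memory with `cache()`.

```python
from typing import Callable, Iterable


class LazyDataset:
    """Toy stand-in for a Spark dataset: transformations are only recorded,
    and the pipeline runs when an action such as collect() or count() is called."""

    def __init__(self, source: Iterable, steps=()):
        self._source = source
        self._steps = tuple(steps)

    def map(self, fn: Callable) -> "LazyDataset":
        # Transformation: returns a new dataset with one more recorded step.
        return LazyDataset(self._source, self._steps + (("map", fn),))

    def filter(self, pred: Callable) -> "LazyDataset":
        return LazyDataset(self._source, self._steps + (("filter", pred),))

    def _run(self):
        items = iter(self._source)
        for kind, fn in self._steps:
            items = map(fn, items) if kind == "map" else filter(fn, items)
        return items

    def collect(self) -> list:
        # Action: actually executes every recorded step.
        return list(self._run())

    def count(self) -> int:
        return sum(1 for _ in self._run())


if __name__ == "__main__":
    squares = LazyDataset(range(10)).map(lambda x: x * x).filter(lambda x: x % 2 == 0)
    print(squares.collect())  # nothing is computed until this line
```

For comparison, assuming a `SparkContext` named `sc`, the equivalent PySpark pipeline would be `sc.parallelize(range(10)).map(lambda x: x * x).filter(lambda x: x % 2 == 0).collect()`.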

NoSQL databases: flexibility and scalability.

NoSQL databases are a group of databases that differ from traditional relational databases in their flexibility and scalability. They can store and process the unstructured and semi-structured data that is common in Big Data. NoSQL databases come in several types, including key-value, wide-column, document, and graph databases. Well-known examples include Cassandra, MongoDB, and Couchbase.
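The four NoSQL data models differ mainly in how records are shaped and looked up. The sketch below uses plain Python dictionaries to model the same hypothetical user data in each style; it illustrates the data models only and does not use the APIs of Cassandra, MongoDB, or Couchbase. The helper `find_documents` is hypothetical.

```python
# Key-value model: an opaque value looked up by a single key (e.g. a session cache).
kv_store = {"session:9f2c": "user-42"}

# Document model: self-describing, possibly nested records; fields may differ
# between documents, so no fixed schema is required.
documents = [
    {"_id": "user-42", "name": "Alice", "tags": ["premium"],
     "address": {"city": "Berlin"}},
    {"_id": "user-43", "name": "Bob"},  # no tags or address: still valid
]

# Wide-column model: rows keyed by ID, each row holding its own set of columns.
column_family = {
    "user-42": {"plan": "premium", "logins": 12},
    "user-43": {"logins": 3},
}

# Graph model: nodes plus edges, suited to relationship queries.
follows = {"user-42": {"user-43"}, "user-43": set()}


def find_documents(docs, **criteria):
    """Return documents whose top-level fields equal all given criteria."""
    return [d for d in docs if all(d.get(k) == v for k, v in criteria.items())]


if __name__ == "__main__":
    print(kv_store["session:9f2c"])
    print(find_documents(documents, name="Bob"))
    print(column_family["user-43"].get("plan"))  # missing column -> None
    print("user-43" in follows["user-42"])
```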

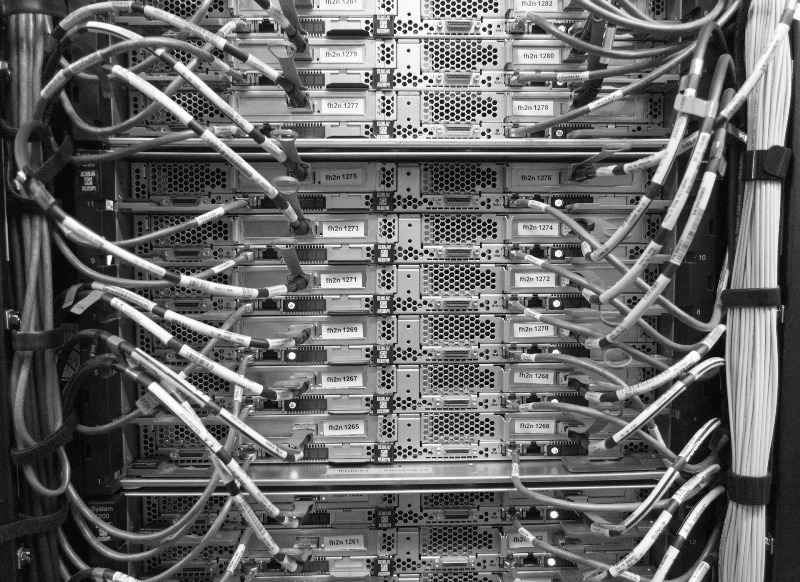

Interaction and integration of Big Data technologies.

It’s important to note that these technologies often interact and integrate to create comprehensive Big Data solutions. For example, Hadoop and Spark can work together to process and analyze big data using HDFS as the basis for data storage. NoSQL databases can be used to store and process unstructured data, which can then be analyzed using Spark or Hadoop.

Big Data ecosystem.

The Big Data ecosystem consists of various components that work together to ensure efficient storage, processing, and analysis of big data. This includes not only the aforementioned technologies, but also other tools and solutions, such as ETL tools (Extract, Transform, Load), data management systems, analytical platforms, cloud computing infrastructure and services.
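The ETL pattern mentioned above can be shown end to end with Python's standard library: extract rows from a CSV export, transform them (drop incomplete rows, convert types, normalize codes), and load them into a database. This is a minimal sketch: the CSV content and table layout are hypothetical, and an in-memory SQLite database stands in for a real data warehouse.

```python
import csv
import io
import sqlite3

# Extract source: a hypothetical CSV export from an order system.
RAW_CSV = """order_id,amount,currency
1001,19.99,usd
1002,,usd
1003,5.50,EUR
"""


def extract(text: str) -> list[dict]:
    """Extract: read CSV rows into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: drop rows without an amount, convert types, upper-case currencies."""
    out = []
    for row in rows:
        if not row["amount"]:
            continue
        out.append((int(row["order_id"]), float(row["amount"]), row["currency"].upper()))
    return out


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for a real warehouse
    load(transform(extract(RAW_CSV)), conn)
    print(conn.execute("SELECT currency, SUM(amount) FROM orders GROUP BY currency").fetchall())
```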

In general, Hadoop, Spark, and NoSQL databases are key technologies that help organizations efficiently store and process big data. Understanding their roles and functionality will help IT professionals better utilize the potential of big data and develop effective solutions for analyzing and processing information.

Adapting and choosing the right Big Data technologies.

Since every organization has its own needs and requirements for processing big data, it is important to adapt technologies and tools to specific situations. Choosing the right combination of Big Data technologies depends on a number of factors, such as the amount of data, types of data, required processing speed, resource availability, and budget.

To successfully implement Big Data projects, professionals must carefully analyze their needs, consider the limitations and capabilities of different technologies, and keep abreast of new developments and trends in the field of Big Data.

Training and development of competencies in the field of Big Data.

As big data technologies are constantly evolving, it is important for IT professionals to constantly improve their skills and knowledge. There are various courses, certification programs, and other resources that can help professionals learn the basics of Big Data technologies, develop new solutions, and maintain their competencies at a high level.

Examples of the use of Big Data in the real world.

The use of big data affects various sectors of the economy, from healthcare and finance to marketing. Let’s look at specific examples of Big Data application in various industries and learn about the benefits and challenges associated with the use of big data.

- Healthcare: Personalization of healthcare. In the healthcare sector, big data makes it possible to collect and analyze patient information, such as genetic data, health data, and medical records, to develop personalized treatment plans. This can help increase the efficiency of healthcare services, reduce costs, and improve the quality of patient care.

- Finance: Better risk management and fraud detection. In the financial sector, big data analysis helps organizations better manage risk and detect fraud. By analyzing transactions, credit ratings, and other data, financial institutions can develop risk models that help them make informed lending and investment decisions.

- Marketing: Targeted advertising and analysis of consumer behavior. In marketing, the use of big data allows companies to better understand consumer demand and market trends. By analyzing customer information, feedback, and social media behavior, companies can develop targeted advertising campaigns and offers that better meet consumer needs. Big data analysis also helps to identify new market niches and business development opportunities.
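As a concrete example of turning customer behavior into targeted offers, a simple "frequently bought together" recommendation counts how often pairs of products appear in the same customer's purchases. The minimal sketch below works on hypothetical baskets; production recommender systems usually rely on far more sophisticated models, and the function names are illustrative.

```python
from collections import Counter
from itertools import combinations

# Hypothetical purchase baskets, one set of products per customer.
baskets = [
    {"tent", "sleeping_bag", "lamp"},
    {"tent", "sleeping_bag"},
    {"lamp", "batteries"},
    {"tent", "lamp"},
]


def co_occurrence(baskets):
    """Count how often each ordered pair of products is bought by the same customer."""
    counts = Counter()
    for basket in baskets:
        for a, b in combinations(sorted(basket), 2):
            counts[(a, b)] += 1
            counts[(b, a)] += 1
    return counts


def recommend(product, counts, k=2):
    """Suggest the k products most often bought together with `product`."""
    scored = [(other, n) for (p, other), n in counts.items() if p == product]
    scored.sort(key=lambda item: (-item[1], item[0]))  # ties broken alphabetically
    return [other for other, _ in scored[:k]]


if __name__ == "__main__":
    counts = co_occurrence(baskets)
    print(recommend("tent", counts))
    print(recommend("lamp", counts))
```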

Benefits and challenges of using Big Data.

The use of big data offers a number of benefits for various sectors of the economy. Some of them include:

- Improving the effectiveness of decisions: Big Data allows for in-depth data analysis, which contributes to better decision-making and optimization of business processes.

- More accurate forecasting: The application of analytical models on big data can help in predicting market trends, product demand, and customer needs.

- Innovative development: Big data drives innovation, helping organizations discover new opportunities and development strategies.
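As a deliberately simple instance of the "analytical models" behind forecasting, the sketch below fits a straight-line trend to a series by ordinary least squares and extrapolates it. The figures and function names are hypothetical; real forecasting on big data would typically use richer models that account for seasonality and many explanatory variables.

```python
def fit_trend(values: list[float]) -> tuple[float, float]:
    """Least-squares line y = intercept + slope * t for t = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    t_mean = (n - 1) / 2
    y_mean = sum(values) / n
    cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(values))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    return y_mean - slope * t_mean, slope


def forecast(values: list[float], steps_ahead: int = 1) -> float:
    """Extrapolate the fitted trend `steps_ahead` periods past the last observation."""
    intercept, slope = fit_trend(values)
    return intercept + slope * (len(values) - 1 + steps_ahead)


if __name__ == "__main__":
    monthly_demand = [100, 110, 120, 130]  # hypothetical figures
    print(forecast(monthly_demand))  # next month on the fitted trend line
```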

However, along with the benefits, the use of big data also has its challenges:

- Ensuring data privacy and security: Organizations need to be especially careful about storing and processing large amounts of data to avoid privacy breaches and data loss.

- Infrastructure and data processing costs: Storing and processing big data can be costly, requiring significant investments in hardware and software, as well as the appropriate level of staff expertise.

- Processing unstructured data: Much big data comes in unstructured form, such as text messages, images, and videos. Organizations need to develop new methods and algorithms to effectively process and analyze such data.

- Ethical issues: The use of big data can infringe on users’ privacy. Organizations need to consider ethical aspects when collecting, processing, and using big data.

The use of big data can bring significant benefits to various industries, such as healthcare, finance, and marketing. However, the successful use of big data requires an understanding of the relevant technologies, the ability to develop effective solutions for analyzing and processing data, and consideration of privacy, security, and ethical challenges.

Preparing for the future: New trends and innovations in big data technology.

There are significant changes and developments in the field of big data, including the emergence of new machine learning algorithms, artificial intelligence, and cloud storage solutions. These innovations are opening up new opportunities for more efficient processing and analysis of big data, which gives companies the opportunity to gain new strategic advantages.

The importance of developing a data-driven culture in organizations.

Ensuring success in the big data era requires organizations to create a culture that supports and fosters the use of data to drive decision-making at all levels. This includes training and educating employees, providing access to the necessary tools and resources to analyze data, and creating transparent processes for sharing knowledge and best practices.

Organizations should actively integrate big data into their strategy and planning to optimize workflows, increase efficiency, and improve results. Tracking emerging trends, developing competencies, and interacting with other businesses that are also using big data will help organizations stay at the forefront of this revolutionary technology and ensure future success.

Interconnection: Big Data, Artificial Intelligence, Neural Networks, and Blockchain.

- Big Data and artificial intelligence. Big data and artificial intelligence (AI) are closely related, as many AI systems learn to identify patterns from large amounts of data. With more relevant, high-quality data, AI models generally become more accurate at prediction and analysis. Accordingly, AI is becoming more widespread in various industries, including medicine, finance, and marketing.

- Big Data and neural networks. Neural networks are a family of machine learning models, loosely inspired by the human brain, used to solve complex problems. Trained on big data, neural networks can learn to identify patterns and draw conclusions even when the data contains noise or incomplete information. Neural networks are especially useful in areas such as computer vision, natural language processing, and recommender systems.

- Big Data and blockchain. Blockchain is a decentralized ledger technology that provides a high level of security, transparency, and independence from any single controlling body. Thanks to these properties, it can be used to record data, or records of how data is used, in a tamper-evident way, supporting the reliability and traceability of information. This opens up new opportunities in a number of industries, such as finance, logistics, and healthcare.
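The tamper evidence behind a blockchain comes from hash-linking: each block stores the hash of its predecessor, so altering any earlier record breaks the links that follow it. The minimal single-machine sketch below uses Python's `hashlib` with hypothetical data-access events. It deliberately omits what makes a real blockchain decentralized, namely replication across many nodes and a consensus protocol.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, data: dict, prev_hash: str) -> str:
    """Hash a block's contents together with its predecessor's hash."""
    payload = json.dumps({"index": index, "data": data, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(records: list[dict]) -> list[dict]:
    """Link records so each block stores the hash of the previous one."""
    chain, prev = [], GENESIS
    for i, data in enumerate(records):
        h = block_hash(i, data, prev)
        chain.append({"index": i, "data": data, "prev": prev, "hash": h})
        prev = h
    return chain


def is_valid(chain: list[dict]) -> bool:
    """Recompute every hash; an edited block no longer matches its stored hash."""
    prev = GENESIS
    for block in chain:
        if block["prev"] != prev:
            return False
        if block_hash(block["index"], block["data"], prev) != block["hash"]:
            return False
        prev = block["hash"]
    return True


if __name__ == "__main__":
    chain = build_chain([{"event": "dataset uploaded"}, {"event": "dataset read by analyst"}])
    print(is_valid(chain))                     # True
    chain[0]["data"]["event"] = "tampered"
    print(is_valid(chain))                     # False
```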

Conclusion.

Understanding big data is extremely important in today’s world, as it plays a key role in solving the most pressing problems and creating new opportunities. The introduction of big data in various fields, such as healthcare, finance, marketing, and many others, allows for improved productivity, efficiency, and innovation.

At the same time, big data knowledge and skills are becoming increasingly important as specialists in this area are becoming more and more sought after in the labor market. Mastery of big data technologies such as Hadoop, Spark, and NoSQL enables professionals to ensure high competitiveness of their organizations.

It is also important to note the interconnection of big data with other advanced technologies, such as artificial intelligence, neural networks, and blockchain. The joint use of these technologies can open up new horizons for the development of society and help solve problems that were previously considered insoluble.

FAQ (Frequently Asked Questions):

What is Big Data?

Big Data refers to large volumes of data that are collected, stored, and analyzed to produce useful information. This data can be structured, semi-structured, or unstructured.

What are some examples of Big Data?

Examples of Big Data include social media data, server logs, data from Internet of Things (IoT) sensors, meteorological data, and much more.

When did the term “Big Data” appear?

The term came into use in the late 1990s. A key milestone followed in 2001, when Doug Laney, an analyst at the META Group (later acquired by Gartner), published a report describing data growth in terms of volume, velocity, and variety, which became the basis of the widely cited “3V” definition of Big Data.

What are the main characteristics of Big Data?

The main characteristics of Big Data include Volume, Velocity, Variety, Veracity, and Value.

What are large amounts of collected data called?

Large amounts of collected data are commonly referred to as “Big Data”.

What technologies are used to process Big Data?

A variety of technologies are used to process big data, such as Hadoop, Spark, NoSQL databases, and machine learning and artificial intelligence algorithms.